Well, sub-zero is a range. 0 K, or absolute zero, is below 0 °C, so sub-zero can equal absolute zero at one point in that range.

I’m not sure if it’s equivalent, but in the meantime I’ve cobbled together a series of commands from various forums to do the whole process, and came up with the following OpenSSL commands:

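```shell
# Root CA: private key plus a self-signed root certificate valid for ~10 years
openssl genrsa -out servorootCA.key 4096
openssl req -x509 -new -nodes -key servorootCA.key -sha256 -days 3650 -out servorootCA.pem

# Server: private key plus a certificate signing request (the CN is entered at the prompt)
openssl genrsa -out star.servo.internal.key 4096
openssl req -new -key star.servo.internal.key -out star.servo.internal.csr

# Sign the CSR with the root CA, applying the [v3_req] extensions from openssl.cnf
openssl x509 -req -in star.servo.internal.csr -CA servorootCA.pem -CAkey servorootCA.key -CAcreateserial -out star.servo.internal.crt -days 3650 -sha256 -extfile openssl.cnf -extensions v3_req
```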
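The last command reads a `[v3_req]` section from `openssl.cnf`, which isn’t shown above. Current Chrome and Firefox match the hostname against the certificate’s subjectAltName entries rather than its CN, so that section should list the names. A minimal sketch of such a file, assuming the certificate should cover `servo.internal` and `*.servo.internal` (those names and the key-usage values are my assumptions, not the original file):

```shell
#!/bin/sh
# Writes the extension file used by: openssl x509 -req ... -extfile openssl.cnf -extensions v3_req
# Assumed names: servo.internal and *.servo.internal (adjust to your setup).
cat > openssl.cnf <<'EOF'
[ v3_req ]
# Leaf certificate for a server, not a CA
basicConstraints = CA:FALSE
keyUsage = critical, digitalSignature, keyEncipherment
extendedKeyUsage = serverAuth
# Browsers match the requested hostname against these entries, not the CN
subjectAltName = @alt_names

[ alt_names ]
DNS.1 = servo.internal
DNS.2 = *.servo.internal
EOF
```

Rerunning the signing command unchanged should then give a certificate whose `openssl x509 -in star.servo.internal.crt -noout -text` output lists both names under "X509v3 Subject Alternative Name".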

Only the .crt and .key files live on the server, while the rest stays on a USB stick to keep it out of the way.

Hopefully it’s the same. I’ll still go through the book out of curiosity… and come to think of it, I also need to set up Calibre :-).

Thanks for everything. I’ll update the post with the full solution once I’m done, since it turned out a lot messier than anticipated…

Don’t worry, Lemmy is asynchronous after all. Instant responses aren’t expected. Plus, I know life gets in the way :-).

It was basically a misconception I had about how the homelab router would route the connection:

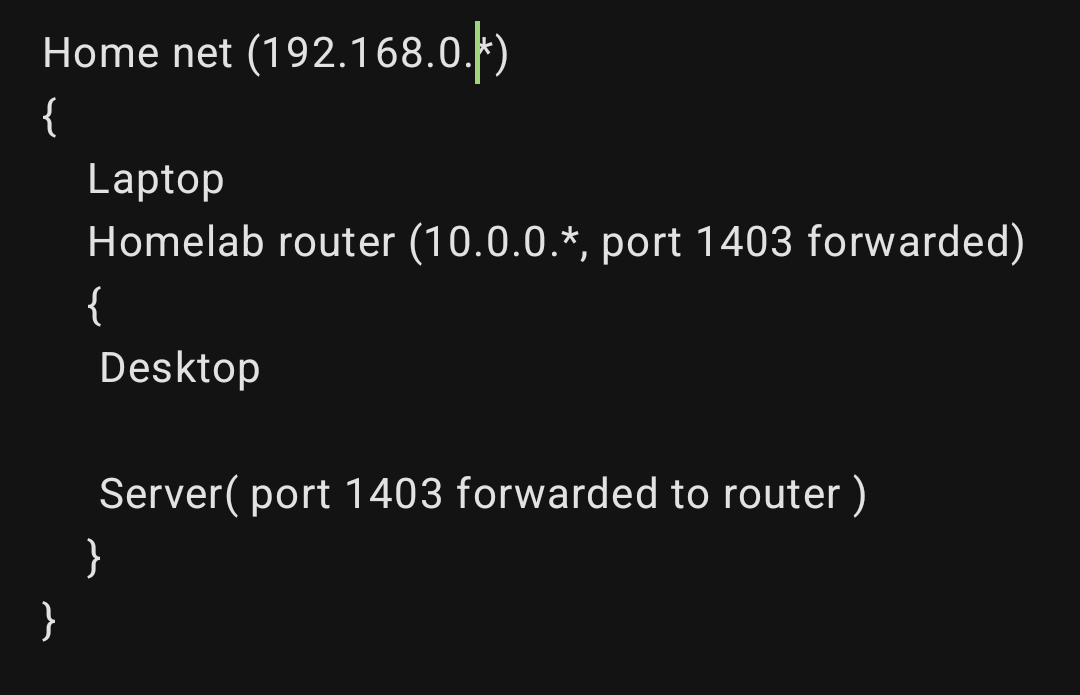

Basically, with Pi-hole set up, servo.internal resolves to 192.168.1.y, the IP of the homelab router. So when a machine inside the homelab (on 10.0.0.*) connects to the server, it reaches it via the router’s 192.168.1.y IP.

The misconception was that I thought all the traffic would bounce between the homelab router and the home router, going through the horrendously slow LAN cable that connects them and crippling the bandwidth between the 10.0.0.* machines and the server.

I wanted to set up another Pi-hole instance inside the homelab, so machines there would connect directly to the server on its 10.0.0.* address instead of 192.168.1.y, and not bounce needlessly between the two routers.

But apparently the homelab router realizes it’s talking to itself (what’s usually called NAT loopback, or hairpinning) and routes the data directly to the server, without passing through the home router and the slower Ethernet link. So the issue doesn’t exist, and I can use a single Pi-hole instance resolving the server to 192.168.1.y without problems. (Thanks to darkan15 for explaining that.)
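Pi-hole’s resolver is a fork of dnsmasq, and one way to get the single-instance setup described above is a dnsmasq `address=` line, which answers for a domain and all of its subdomains. A sketch, assuming a drop-in file that you copy into `/etc/dnsmasq.d/`: the file name is hypothetical, newer Pi-hole releases may only read that directory if the corresponding setting is enabled, and `192.168.1.y` is kept as the post’s placeholder, so it has to be replaced with the router’s real address.

```shell
#!/bin/sh
# Router B's address as seen from Router A's network.
# "192.168.1.y" is the placeholder from the post: replace y before using the file.
ROUTER_B_IP="192.168.1.y"

# address=/domain/ip makes dnsmasq answer servo.internal and every *.servo.internal name.
# Hypothetical file name: copy it into /etc/dnsmasq.d/, then e.g. run `pihole restartdns`.
cat > 02-servo-internal.conf <<EOF
address=/servo.internal/${ROUTER_B_IP}
EOF
```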

While I do have my self-learned self-hosted knowledge, I’m not an IT guy, so I may be mistaken here and there.

I think most of us are in a similar situation. Hell, I weld for a living atm :-P.

However, I can give you a diagram of how it works on my setup right now, and also gift you a nice ebook to help you set up your mini-CA for your LAN:

The diagram would be useful, considering that rn I’m losing my mind between man pages.

As for the book… I can’t accept. Just give me the name/ISBN and I’ll get it myself. Still, thanks for the offer.

No, the request would stop at Router B and keep all traffic on the 10.0.0.* network; it would not change subnets or anything.

OK, perfect. That was my hiccup. I thought it was going to take the roundabout way and slow the traffic down. I was willing to put in raw IPs (masking them behind the landing page buttons) if it meant not going needlessly through the slower cable. If the router keeps everything inside its own subnet when it realizes it’s talking to itself, then it’s perfect.

Thanks for the help.

I think I didn’t explain myself the right way.

Computers inside Router B will access the server via its IP. For them, nginx will only serve an HTML file with the links, basically acting as a bookmark page for the IP:port combos. Anything coming from Router A, instead, will receive a landing page with the subdomains, which Pi-hole will resolve (it’s exposed to the machines on Router A as an open port on Router B), making them pass through the proxy.

So basically the DNS will only be used by machines on Router A, and the nginx rules just serve links through the reverse proxy if the machine is on Router A (i.e. the connection comes from 10.0.0.1 from the server’s POV, or maybe I can go by the server name in the request; I’ll have to mess with nginx), or the page with the server’s raw IP + the service’s port if it comes from Router B.
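Choosing the landing page by source address can be done with nginx’s `geo` module, which maps the client IP to a variable using the most specific matching network. A sketch under a few assumptions: the inner LAN is 10.0.0.0/24, and the file names, web root and certificate paths are hypothetical. Many routers’ port forwarding keeps the original 192.168.x source instead of rewriting it to 10.0.0.1; those clients fall through to the `default` entry, which serves the same subdomain page, so the mapping should work either way.

```shell
#!/bin/sh
# Writes an nginx snippet meant for /etc/nginx/conf.d/ (included inside the http {} block).
# Page names, web root and certificate paths are hypothetical.
cat > servo-landing.conf <<'EOF'
# Map the client address to a landing page; geo picks the most specific match.
geo $landing_page {
    default        /landing-subdomains.html;  # anything outside Router B's LAN
    10.0.0.0/24    /landing-direct.html;      # machines inside Router B: raw IP:port links
    10.0.0.1       /landing-subdomains.html;  # Router B itself, i.e. forwarded from Router A
}

server {
    listen 1403 ssl;
    server_name servo.internal;

    ssl_certificate     /etc/nginx/certs/star.servo.internal.crt;
    ssl_certificate_key /etc/nginx/certs/star.servo.internal.key;

    root /srv/landing;

    # Serve whichever page geo selected for the bare URL.
    location = / {
        try_files $landing_page =404;
    }
}
EOF
```

`nginx -t` after copying the file in place should catch syntax errors before a reload.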

Router A is unfortunately junk from my ISP, and it doesn’t let me change the DNS. So I’ll just add Router B (and thus the Pi-hole instance on the server) as the primary DNS, and an external one as the secondary DNS for fallback.

If you decide on running the secondary local DNS on the server on the Router B network, there is no need to loop back, as that DNS will keep domain lookups and requests on 10.0.0.x all internal to the Router B network.

Wouldn’t this link to the 192.168.0.y address of Router B pass through Router A and loop back to Router B, routing through the slower cable? Or is the router smart enough to realize it’s just talking to itself and cut Router A out of the traffic?

I think I’ll do this with one modification: I’ll make nginx serve the landing page with the subdomains when computers on Router A access it (by telling nginx to serve that page when contacted by 10.0.0.1), while computers inside Router B get another landing page that bypasses the proxy by giving the server’s direct 10.0.0.* IP with the port.

Mostly because the Ethernet link between Router A and B is old and limits transfers to 10 Mbps, so I’d be handicapping computers inside Router B by looping back. Especially since everything inside Router B is supposed to be safe, and those machines will be the ones transferring most of the data to the server.
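For a sense of scale, a rough calculation with a hypothetical 1 GB file, ignoring protocol overhead (so real transfers over that link would be somewhat slower still):

```latex
10\ \text{Mbit/s} = \frac{10}{8}\ \text{MB/s} = 1.25\ \text{MB/s},
\qquad
t_{1\,\text{GB}} = \frac{8000\ \text{Mbit}}{10\ \text{Mbit/s}} = 800\ \text{s} \approx 13\ \text{min}
```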

Thanks for the breakdown. It genuinely helped in understanding the Daedalus-worthy path the connections need to take. I’ll update the post with my final solution and config once I make it work.

I think Pi-hole would be the best option. But come to think of it… to make it work I’d need two Pi-hole instances, since the server is basically straddling two NATs, with the inner router port-forwarding port 1403 from the server. Basically:

To access the services from both the desktop and the laptop, I’d need two DNS resolvers, since for the laptop the name needs to resolve to the 192.168.0.* address of the homelab router, while for the desktop it needs to resolve directly to the server’s 10.0.0.* address.

Also, a little question: if I do manage to set it up with subdomains, will all the traffic still go through port 1403? The main reason I wanted to set up a proxy was to avoid turning the homelab’s router into Swiss cheese.
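On the port question: with name-based virtual hosting, every subdomain can share the single forwarded port, because nginx picks the `server` block from the requested name (SNI during the TLS handshake, then the Host header). A sketch with two hypothetical services behind the wildcard certificate; the service names, upstream addresses and ports are made up for illustration.

```shell
#!/bin/sh
# Writes an nginx snippet meant for /etc/nginx/conf.d/.
# Service names, upstream IPs and ports are hypothetical placeholders.
cat > servo-services.conf <<'EOF'
# Both server blocks listen on the same port; nginx selects one by server_name.
server {
    listen 1403 ssl;
    server_name media.servo.internal;
    ssl_certificate     /etc/nginx/certs/star.servo.internal.crt;
    ssl_certificate_key /etc/nginx/certs/star.servo.internal.key;

    location / {
        proxy_pass http://10.89.0.10:8096;   # hypothetical container address
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

server {
    listen 1403 ssl;
    server_name books.servo.internal;
    ssl_certificate     /etc/nginx/certs/star.servo.internal.crt;
    ssl_certificate_key /etc/nginx/certs/star.servo.internal.key;

    location / {
        proxy_pass http://10.89.0.11:8083;   # hypothetical container address
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
EOF
```

In this arrangement only 1403 needs forwarding on Router B; the services’ own ports don’t.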

… The rootCA idea though is pretty good… At least I won’t have Firefox nagging me every time I try to access it.

(especially with Docker containers!)

Already on it! I’ve made a custom skeleton container image with Podman that, when started, runs a shell script I customize for each service, while a cronjob calls another script in all of them via `podman exec` to update them. Plus, they’re all connected to a Podman network with manually assigned IPs so they can talk to each other. Not how you’re supposed to use containers, but hey, it works. Add to that a btrfs pool with data set to single and metadata set to raid1, so I can lose a disk without losing all of the data (they’re scrap drives; storage is prohibitively expensive here), plus transparent compression and a cronjob for scrub and deduplication.
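The scrub and dedup cronjob could look something like the file below. This is a sketch, not the actual setup: the mount point `/mnt/pool`, the schedule and the use of `duperemove` are assumptions (btrfs has no built-in background deduplication, so an external tool such as duperemove or bees is needed).

```shell
#!/bin/sh
# Writes a file for /etc/cron.d/ (fields: minute hour day month weekday user command).
# Assumptions: the btrfs pool is mounted at /mnt/pool and duperemove is installed.
cat > btrfs-maintenance <<'EOF'
SHELL=/bin/sh
PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

# Sunday 03:00: scrub reads everything and verifies checksums (-B waits until it finishes).
# Metadata is raid1, so bad metadata can be repaired from the other copy;
# data is single, so data errors are only detected and reported.
0 3 * * 0 root btrfs scrub start -B /mnt/pool

# Sunday 05:00: dedupe identical extents; the hash file lets later runs skip unchanged files.
0 5 * * 0 root duperemove -dr --hashfile=/var/tmp/duperemove.hash /mnt/pool
EOF
```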

I manage with most of the server, but web stuff just locks me up :'-)

I’ll need to check. I doubt I’ll be able to set up a DNS resolver, since I can’t risk the whole network going down if it fails. Plus, the server will only have limited exposure to the home network via the other router.

Still. Thanks for the tips. I’ll update the post with the solution once I figure it out.

If I remember correctly there was an option for that. I need to dig up the manual…

Still, I think I’m going to need to change approach. Eventually one of the other services will bite me if I keep using subdirectories.

I’m sorry, I forgot to mention it in the post, but the server isn’t facing the outside. It’s just behind an extra NAT to keep my computers separate from the rest of the home. There’s no domain name pointing to it. I’m not sure if that affects using subdomains.

The SSL certificates shouldn’t be a problem since it’s just a self-signed certificate; I’m only using SSL for peace of mind.

I’m sorry if I’m not making sense. It’s the first time I’m working with web servers, and I genuinely have no idea what I’m doing. Hell, the whole project has basically been a baptism by fire, since it’s my first proper server.

Best I can do is an Elton John-style jacket. Dazzle them to hell and back.

In the end I managed by adding two fans under the desk and wiring them through a switch to one of the free Molex connectors. Now it never goes over 77 °C, even at full tilt.

The wiggle room on the actual pins saved you :-). I’m frankly surprised Lenovo didn’t change pinouts between versions. They are more than capable of that.

It’s a ThinkCentre, right?

It’s literally a CPU power connector. If you can solder and you have a scrap PSU, you can jerry-rig a new cable. Hell, I made a whole wire loom out of one of those SATA ports.

we happy few has entered the chat

When I wake up, my body is immediately ready, but my mind takes a lot longer to fully boot. During that in-between time you can talk to me, but my brain won’t register you.

JavaScript’s still arriving to the meeting

For the ringtone I have the one from the SIP Pulsar phone we had in Italy, while for notifications I have the cascade chime from some doorbell in an ancient condo I worked at. Before that I had the “You’ve got mail” from AOL, but it was a bit too esoteric since no one where I live speaks English.

They can kill as well. But indiscriminately and slowly.

Acer TravelMate 4070. Used as a control unit for a cut-and-bend machine. (Don’t ask me why.) Holy shit, that bastard has outlived around three of the machines that began work with it.

What I’m saying is, Acer was good, but like all things, enshittification ruined it.

HP, though… I don’t think I’ve ever seen a good HP, even the ancient ones. I feel like the only HP stuff that redeems itself is the calculators and some of the old test equipment.